The first thing I thought about when reading was the Disney movie WALL-E. I loved the movie and the romance between WALL-E and EVE. But what I didn't like was all the obese humans on a spaceship full of super-advanced technology that did everything for them. And I absolutely hated the sad-looking, destroyed, uninhabitable Earth. Every time I watch it, I worry a little in the back of my head. Could the human race really get to that point? Technology is helpful and has changed the world in so many ways. But at what point is it harmful? Is it already significantly harmful without us knowing?

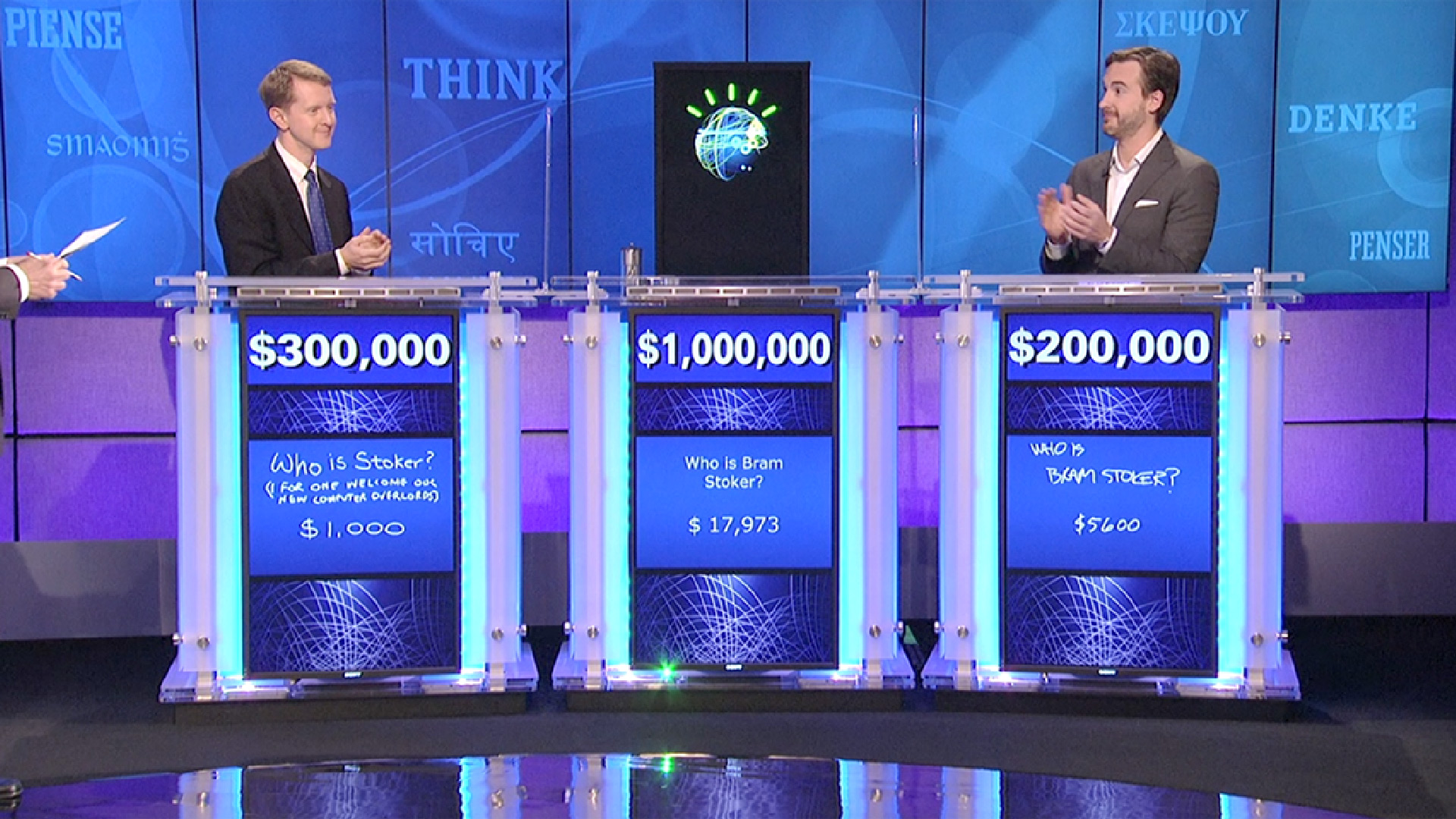

This chapter of Monster Culture actually made the world's rapid advancement of technology seem like something we really need to be aware of, and it's quite scary. There was a real emphasis on the fact that "technology promises perfection and victory over death." As the chapter went on, the "victory over death" part started to make more sense to me. The idea that technology could mean immortality is a weird concept. Technology is becoming more and more amazing, to the point where we have robots that are astonishingly intelligent, and one day robots could really become "virtually indistinguishable from humans." In WALL-E, some of the robots actually did start to take over from the humans until the humans were able to overcome them. Will there be a day when we can't overcome them? Is there a need to make robots so smart that they can manipulate us?

The whole idea of immortality seemed pointless to me once I read further that "when [a] body is destroyed, [its] memory, [its] consciousness, will be transmitted to a new one." I believe that you are not becoming immortal at all. Everyone is unique, and just because you transfer someone's memory into a piece of technology doesn't mean they will be the same person. I feel like there is a better way to explain it, but it was touched on later in the chapter. If all of a sudden everyone can become immortal, then "everybody is nobody." We will all just become one in a whole sea of memories that have been ripped out of their unique, rightful owners, the only ones who can interpret and express them.

When Freud's theory of the opposed energy flows of the sex drive and the death drive came up, I just thought it was a fancy way of talking about our natural urge to reproduce, which is essentially our way of not dying. But what I really didn't understand was how our own death is like a "blending and fusion of separate objects." And how does a "self-contained individual" dissolve into "continuity"?

Overall, I enjoyed how different this read was; it turned technology into a monster that humans have created. So doesn't that mean we are our own monster?

Sources:

http://giphy.com/search/wall-e

Levina, Marina, and Diem-My T. Bui. Monster Culture in the 21st Century: A Reader. New York: Bloomsbury Academic, 2013. Print.